AI Is Fuelling Riots, But It Can Also Help Mitigate Them

This past week the UK has been rocked by violent riots, spurred by racist rumours propagated through social media algorithms and group messaging. While the causes are different this time round, there are echoes of the 2011 London riots. Back then I lived in North London, and what I saw inspired the topic of my Master's thesis at Oxford: predicting collective behaviour using AI and social media data. AI helped fuel those riots – and I wanted to understand them.

With the outsize role that social media platforms play in shaping collective behaviour, often in real time, I figured social data would be a great place to start. Yet with such large volumes of messages, how do you see the forest for the trees? A decade ago I turned to language analysis, specifically the classification of illocutionary speech acts. Using this framework, I looked at how the language of people on Twitter (now X) evolved over time as crowds morphed into mobs.

For example, some messages people post are assertives ("there is a car on fire in the street") while others are commissives ("I am going to meet my friends") and yet others are expressives ("oh wow, that's crazy"). One can analyse how the ratio of questions to commissives changes as people travel to a hotspot and join in the violence. Similarly, the share of expressives can act as an emotional barometer as the mood of the crowd shifts – and show when to intervene.

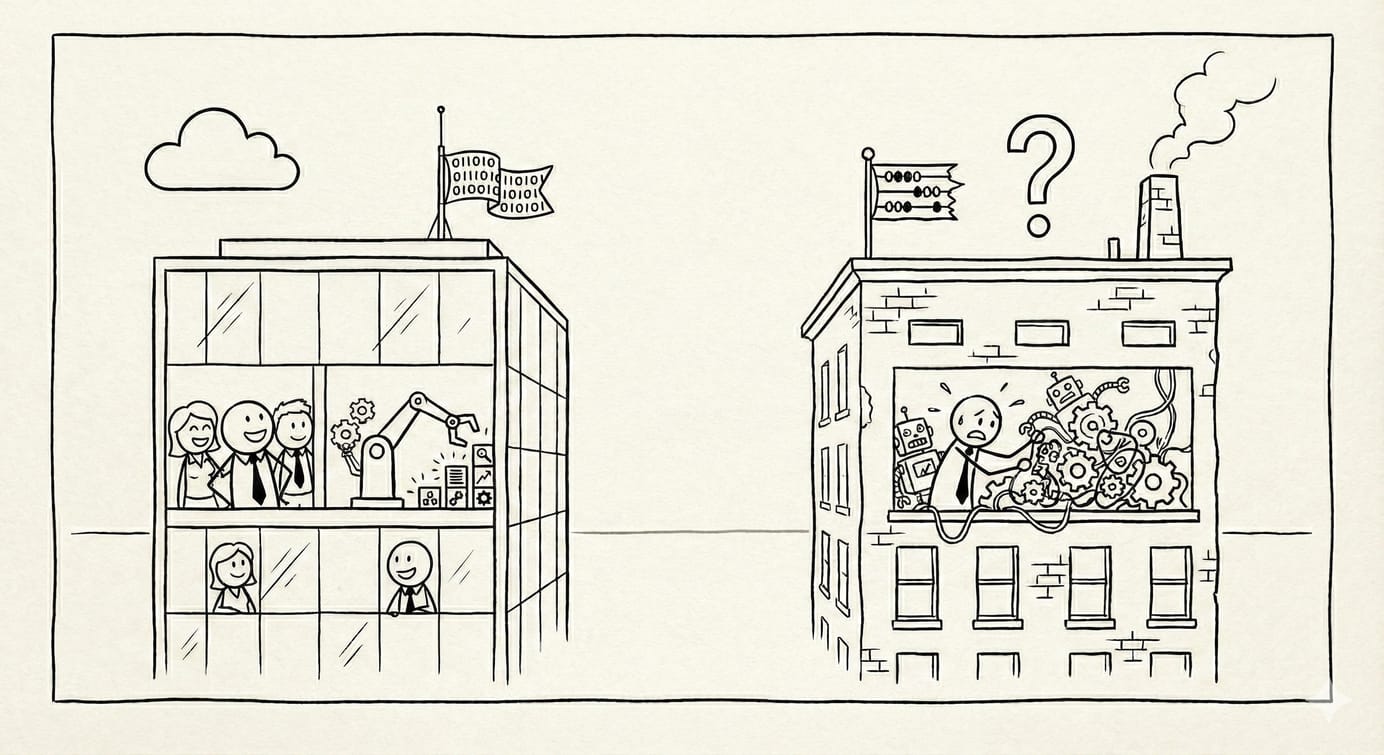
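
To make the idea concrete, here is a minimal Python sketch of how such ratios could be tracked over time. It is not the code from my thesis: the label names, the 30-minute window, and the sample messages are all assumptions made up for illustration, and it presumes each message has already been labelled with a speech-act type.

```python
from collections import Counter, defaultdict
from datetime import datetime


def window_start(ts, minutes=30):
    """Round a timestamp down to the start of its window.

    Assumes `minutes` divides 60 evenly (e.g. 10, 15, 30).
    """
    return ts.replace(minute=ts.minute - ts.minute % minutes,
                      second=0, microsecond=0)


def act_shares(messages, minutes=30):
    """Map each window to the share of every speech-act label in it.

    `messages` is an iterable of (timestamp, label) pairs.
    """
    counts = defaultdict(Counter)
    for ts, label in messages:
        counts[window_start(ts, minutes)][label] += 1
    shares = {}
    for win, counter in sorted(counts.items()):
        total = sum(counter.values())
        shares[win] = {label: n / total for label, n in counter.items()}
    return shares


def question_commissive_ratio(window_shares):
    """Ratio of questions to commissives, or None if there are no commissives."""
    commissives = window_shares.get("commissive", 0.0)
    if not commissives:
        return None
    return window_shares.get("question", 0.0) / commissives


# Hypothetical, hand-labelled sample: not real riot data.
SAMPLE = [
    (datetime(2011, 8, 8, 20, 5), "question"),
    (datetime(2011, 8, 8, 20, 10), "question"),
    (datetime(2011, 8, 8, 20, 15), "assertive"),
    (datetime(2011, 8, 8, 20, 20), "commissive"),
    (datetime(2011, 8, 8, 20, 35), "commissive"),
    (datetime(2011, 8, 8, 20, 40), "commissive"),
    (datetime(2011, 8, 8, 20, 45), "expressive"),
    (datetime(2011, 8, 8, 20, 50), "question"),
]

SHARES = act_shares(SAMPLE)
for win, window_shares in SHARES.items():
    print(win.strftime("%H:%M"), question_commissive_ratio(window_shares))
```

In this toy sample the ratio falls from the first window to the second, which is the kind of shift one would look for as people stop asking what is happening and start committing to act.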

While much of the discussion around AI in the context of this summer's hate crimes has focused on the ways it helps create and disseminate fake news, AI can play an important mitigating role as well. Facial recognition – recently proposed by the prime minister – is one of these areas but by no means the only one. As I found in my research, if analysed using the right tools, social media data can provide rich insights into both the trajectory and the current state of a riot.

At the time, I was working with a small data set of ~67,000 labelled messages. Despite this limitation, I could identify predictable intervals where assertives peaked, as participants shared new information and it propagated across the collective. Being able to identify, isolate, and analyse these updates accelerates the process of establishing situational awareness and can help authorities decide whether an intervention is required and, if so, what type of intervention.
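
As a rough illustration of what spotting those peaks can look like, the sketch below finds local maxima in a series of per-window assertive shares and measures the gaps between them. The threshold, the function names, and the numbers are assumptions chosen for the example; this is a simple stand-in, not the method or the data from the original research.

```python
def find_peaks(series, min_share=0.5):
    """Return indices of local maxima that are at or above `min_share`."""
    peaks = []
    for i in range(1, len(series) - 1):
        is_local_max = series[i] > series[i - 1] and series[i] >= series[i + 1]
        if is_local_max and series[i] >= min_share:
            peaks.append(i)
    return peaks


def peak_intervals(peaks):
    """Number of windows between each pair of consecutive peaks."""
    return [later - earlier for earlier, later in zip(peaks, peaks[1:])]


# Hypothetical share of assertives per time window (made-up values).
ASSERTIVE_SHARE = [0.2, 0.3, 0.6, 0.3, 0.2, 0.25, 0.65, 0.3, 0.2, 0.3, 0.7, 0.4]

PEAKS = find_peaks(ASSERTIVE_SHARE)
print(PEAKS)                  # [2, 6, 10]
print(peak_intervals(PEAKS))  # [4, 4]
```

Evenly spaced peaks like these would suggest that new information is arriving and spreading on a regular rhythm, which makes the next update easier to anticipate.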

Since I completed my graduate degree, the capabilities of AI models have increased exponentially. Whereas I had to painstakingly train my classifiers, a basic LLM can now do all the legwork. For example, try the following prompt: I will give you a number of phrases. Classify them by type of illocutionary act as defined by J.L. Austin: "I am going to go to the riot", "wow this is crazy", "that car is on fire", "why are people angry". Format the output as a table please.
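
For anyone who wants to run this over more than a handful of phrases, here is a small sketch that builds the prompt programmatically and parses a table-style reply. The call to the model itself is deliberately left out, since it depends on whichever LLM service you use. The helper names are hypothetical, and `SAMPLE_REPLY` is a hand-written stand-in for a model response, not real output.

```python
def build_prompt(phrases):
    """Assemble the classification prompt for a list of phrases."""
    quoted = ", ".join(f'"{p}"' for p in phrases)
    return (
        "I will give you a number of phrases. Classify them by type of "
        "illocutionary act as defined by J.L. Austin: "
        f"{quoted}. Format the output as a table please."
    )


def parse_table(reply):
    """Extract (phrase, label) pairs from a two-column pipe-delimited table."""
    rows = []
    for line in reply.strip().splitlines():
        cells = [cell.strip() for cell in line.strip().strip("|").split("|")]
        if len(cells) != 2:
            continue  # not a two-column table row
        if set(cells[0]) <= set("-: "):
            continue  # separator row such as |---|---|
        rows.append((cells[0], cells[1]))
    return rows[1:]  # drop the header row


PHRASES = [
    "I am going to go to the riot",
    "wow this is crazy",
    "that car is on fire",
    "why are people angry",
]
PROMPT = build_prompt(PHRASES)

# Hand-written example of what a reply might look like (an assumption).
SAMPLE_REPLY = """
| Phrase | Type |
|---|---|
| I am going to go to the riot | commissive |
| wow this is crazy | expressive |
"""

LABELS = parse_table(SAMPLE_REPLY)
print(PROMPT)
print(LABELS)
```

Real replies will not always come back as a tidy table, so in practice the parsing step would need to tolerate extra text around it.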

While there is no doubt that AI technologies have played a negative role in the recent riots, it is helpful to recognise how they could be used in understanding, mitigating, and preventing them. The increased capabilities of modern language models in particular provide us with potent tools to parse the vast amounts of social media data being generated. We need to proactively harness these to help defuse the crisis on our streets, while not being oblivious to AI's pitfalls.

– Ryan

Cover image by DALL·E.
