Failure Formula: Lessons to Learn From 2020 A Levels

The GCE Advanced Level qualifications, or A Levels, are the most important school-leaving qualification in the British school system. They are a critical determinant of whether a student will be accepted by their chosen university. In a normal year, hundreds of thousands of students would be celebrating their results in August, but not this year. Because COVID-19 made sitting final exams impossible, students' final grades were determined by an algorithm. What could possibly go wrong?

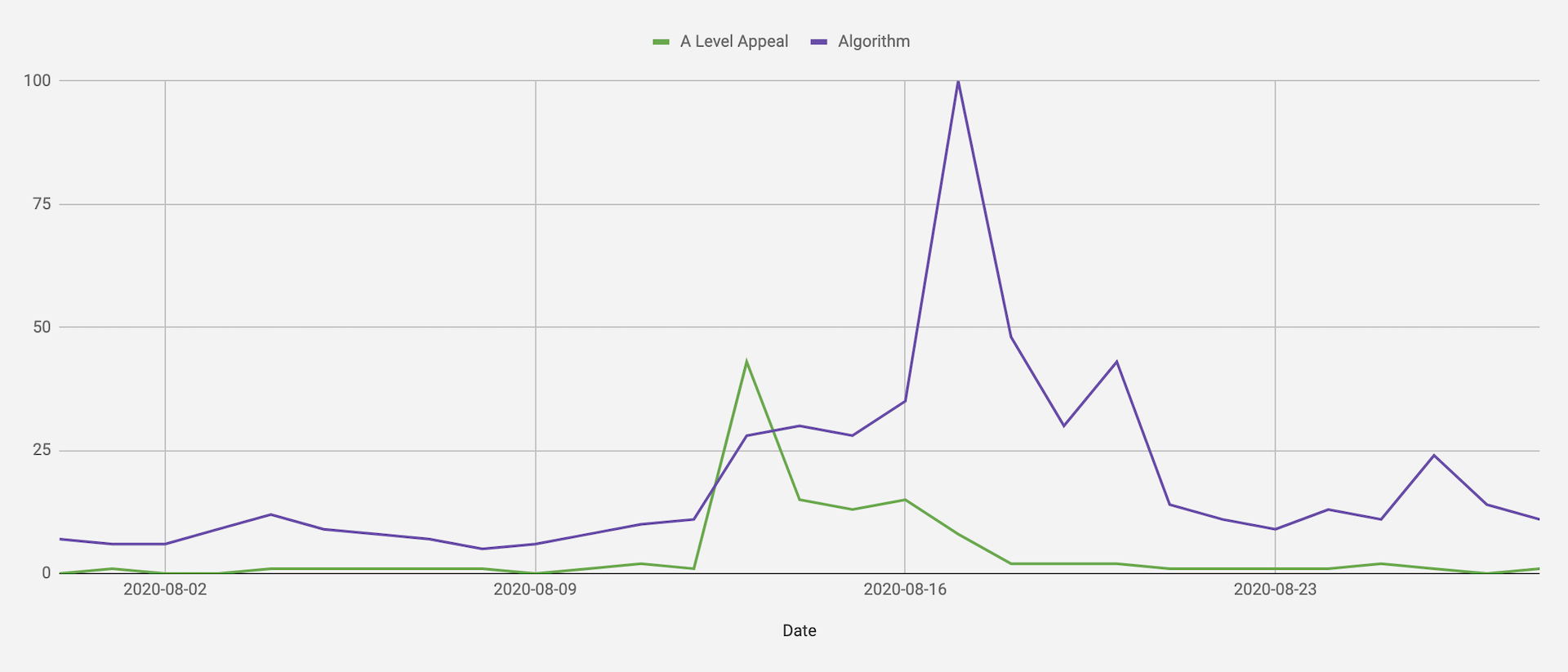

While 58.7% of the students received the grade their teachers had predicted, and 2.2% received at least one grade higher, a full 39.1% saw their results downgraded. This means that nearly 40% of the students risk losing their places at university, because conditional offers are made on predicted grades. The government announced that students would be able to appeal, but the damage was done. Students took to the streets to protest the algorithm.

So what was this "mutant algorithm", as the British prime minister termed it? It turns out it was nothing special; there was not even any machine learning involved. As this excellent breakdown observes, it is really a simple equation (and yes, simple, as it has only four distinct terms) that generates the final grade:

$$P_{kj} = (1 - r_j)\,C_{kj} + r_j\,(C_{kj} + q_{kj} - p_{kj})$$

Effectively, it says that your grade is determined by the school's historical A Level results combined with your class's performance on GCSEs (which are sat two years earlier), weighted by how strong a predictor GCSEs were for A Levels in the past. If no class results are available, only historical school data is used.
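To make the arithmetic concrete, here is a minimal Python sketch of the equation above. The function and argument names are hypothetical, and the reading of each symbol follows this article's description rather than Ofqual's own specification: `c_kj` is taken to be the school's historical share of grade `k`, `q_kj` and `p_kj` the GCSE-based predictions for this year's and previous years' classes, and `r_j` the weight placed on the GCSE adjustment. The numbers are invented purely for illustration.

```python
def predicted_share(c_kj: float, q_kj: float, p_kj: float, r_j: float) -> float:
    """Evaluate P_kj = (1 - r_j) * C_kj + r_j * (C_kj + q_kj - p_kj).

    Assumed meanings (taken from the article's description, not from Ofqual):
      c_kj: school's historical share of students at grade k
      q_kj: GCSE-based prediction for this year's class
      p_kj: GCSE-based prediction for previous years' classes
      r_j:  weight between 0 and 1 given to the GCSE-based adjustment
    """
    if not 0.0 <= r_j <= 1.0:
        raise ValueError("r_j must lie between 0 and 1")
    return (1 - r_j) * c_kj + r_j * (c_kj + q_kj - p_kj)


# Invented example: historically 30% of the school's students got this grade,
# and this year's class looks weaker on GCSEs than past classes did.
with_adjustment = predicted_share(c_kj=0.30, q_kj=0.25, p_kj=0.35, r_j=0.5)

# With r_j = 0 the GCSE terms drop out and only school history remains.
history_only = predicted_share(c_kj=0.30, q_kj=0.25, p_kj=0.35, r_j=0.0)

print(f"with adjustment: {with_adjustment:.3f}")
print(f"history only:    {history_only:.3f}")
```

Expanding the brackets shows that the formula is the same as `c_kj + r_j * (q_kj - p_kj)`: the school's history, nudged by how this year's class compares with earlier ones. Nothing in it refers to what an individual student actually did during the course.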

Final grades should be a fair representation of a student's performance. Yet someone with poor GCSE grades who worked hard to improve would have been penalised. Alarmingly, someone who did well in classes but happened to attend a badly performing school could have been significantly impacted. As one student protested: "My teachers estimated me A*, A, A, and I got a C, B and a D, so I got downgraded by nine grades which is absolutely insane."

Despite the chaos, we should hope that lessons are learned from this rather public debacle. Given the correlation between a school's academic performance and the socioeconomic status of its students, it is clear that the algorithm is biased. Yet this is by no means unprecedented. For years people have been warning about the pernicious biases in algorithms determining everything from parole conditions to loan applications, and those warnings have drawn little public protest. Being locked out of university is disappointing, but being locked up unfairly is terrible.

Paradoxically, one of the lessons we can draw from this fiasco is that if we are going to create automated solutions for performance evaluation, they need to be a lot more complex and intelligent than Ofqual's formula. While simplicity is admirable, people are too multifaceted to be easily circumscribed by four algebraic terms. This is one of the paradigm shifts of big data – that people and products can be assessed at an individual instead of an aggregate level.

At the same time, we need to ensure transparency during both model development and evaluation. While in the private sector aspects of algorithms (e.g. model architecture, weights, hyperparameters) might be confidential, it is critical that subjects understand the criteria on which they will be evaluated. Ideally this discussion happens during the development process, instead of during a rushed post-mortem once the media pressure has become untenable.

Finally, it is important to reflect on the limitations of models to represent the world around us. As George Box noted: "All models are wrong, but some are useful." Being aware of how wrong a model is and taking accountability for the trade-offs made (e.g. precision vs. recall, predictive power vs. simplicity) is paramount in building trust. Misquoting Burns: "The best-laid models of mice and men, go often askew." As with A Levels, what matters is that we learn.

– Ryan
